Cephyr

Cephyr is a storage system running Ceph.

Originally, it ran on the following hardware:

- In total, 18 servers with a 4 x 25 Gbit/s Ethernet network and a total storage capacity of 2.0 PiB. More specifically:

  - 6 storage servers with Intel Xeon Silver 4216 CPU @ 2.10 GHz and 384 GB of RAM.

  - 7 storage servers with Intel Xeon Silver 4114 CPU @ 2.20 GHz and 96 GB of RAM.

  - 3 metadata servers with Intel Xeon Bronze 3104 CPU @ 1.70 GHz and 48 GB of RAM.

  - 2 gateway servers with Intel Xeon Bronze 3106 CPU @ 1.70 GHz and 96 GB of RAM.

This storage was shared among the Swedish Science Cloud, dCache at Swestore, and a user and group storage area connected to the clusters.

The /cephyr file system itself is currently being migrated to new hardware consisting of:

- 14 Lenovo SR630v2 servers, each with:

  - 2 x Intel Xeon Silver 4314 16-core 2.3 GHz processors and 256 GB of memory

  - 2 x M.2 5300 480 GB SSDs (mirrored, for the OS)

  - 3 x 800 GB NVMe PCIe 4.0 drives (for the Ceph journal and database)

  - 1 x Mellanox ConnectX-6 100 Gb 2-port Ethernet adapter

  - 1 x Mellanox ConnectX-6 10/25 Gb 2-port Ethernet adapter

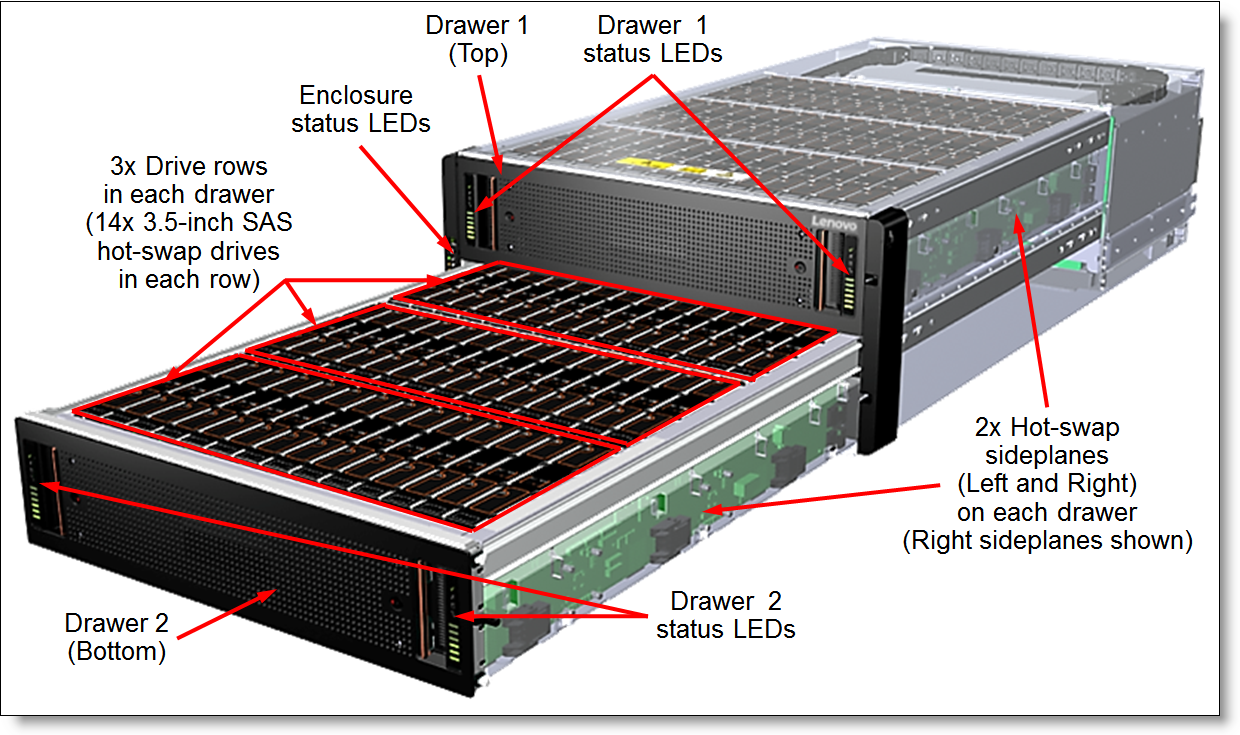

- 7 Lenovo D3284 JBODs, each with 84 x 14 TB SAS HDDs

  - Each JBOD is connected to two servers

  - A total of 8232 TB raw / 6860 TB usable capacity (shared with the Mimer Bulk tier)
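As a quick sanity check on the figures above, the Python sketch below recomputes the raw capacity from the JBOD configuration (7 x 84 x 14 TB) and the ratio of usable to raw capacity. The usable/raw ratio works out to exactly 5/6. The text does not say how Ceph is configured to reach that figure, so the erasure-coding parameters `k=10, m=2` in the sketch are purely an illustrative assumption: any profile with k/(k+m) = 5/6 would give the same ratio. Keep in mind that the stated usable figure is also shared with the Mimer Bulk tier.

```python
from fractions import Fraction

# Figures taken from the hardware list above.
SERVERS = 14
JBOD_COUNT = 7
DRIVES_PER_JBOD = 84
DRIVE_TB = 14      # decimal (vendor) terabytes per HDD
USABLE_TB = 6860   # usable capacity as stated in the text


def raw_capacity_tb(jbods: int, drives_per_jbod: int, drive_tb: int) -> int:
    """Raw capacity in decimal TB: the sum of every drive in every JBOD."""
    return jbods * drives_per_jbod * drive_tb


raw_tb = raw_capacity_tb(JBOD_COUNT, DRIVES_PER_JBOD, DRIVE_TB)
servers_per_jbod = SERVERS // JBOD_COUNT     # 14 servers shared by 7 JBODs
efficiency = Fraction(USABLE_TB, raw_tb)     # exact usable/raw ratio

# Hypothetical: an erasure-coded pool with k data chunks and m coding chunks
# stores k/(k+m) of its raw space as data. k=10, m=2 is only an example that
# matches the ratio; the actual pool layout is not stated in the source.
k, m = 10, 2
ec_efficiency = Fraction(k, k + m)

print(f"raw: {raw_tb} TB")                                     # raw: 8232 TB
print(f"servers per JBOD: {servers_per_jbod}")                 # servers per JBOD: 2
print(f"usable/raw: {efficiency} (~{float(efficiency):.1%})")  # usable/raw: 5/6 (~83.3%)
print(f"matches k={k}, m={m}: {efficiency == ec_efficiency}")  # matches k=10, m=2: True
```

Using `Fraction` instead of floating-point division keeps the ratio exact, so the comparison with k/(k+m) is an exact equality rather than a tolerance check.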

Applications for group storage are handled through the SUPR storage rounds. Group storage is mounted under /cephyr, and a compute project is required for access.

Project Storage Decommissioning

See Parent Page